RAID with Power Awareness

Redundancy is a key feature of most enterprise storage solutions. Mechanisms like RAID play a key role in providing different levels of redundancy. In such systems, scheduling redundancy-related operations could be exploited for power management.

Energy-efficient redundant and inexpensive disk array (EERAID) is a RAID engine aimed at minimizing the energy consumption of RAID disks by adaptively scheduling requests to the various disks that form the RAID group. Specifically, by controlling the mapping of logical requests to a RAID stripe, the idle period of a subset of disks is maximized, which facilitates spinning down those disks. For RAID 1, a windowed round-robin scheduler can be used: it dispatches a window of requests to one RAID disk before switching to the other RAID disks, and vice versa. For RAID 5, a transformable read can be used. The main idea is that a read request for data that currently resides on a spun-down disk is served by reconstructing the data from the other data blocks and the parity block of the same stripe. Further, a power-aware de-stage algorithm is proposed to accommodate write requests in the design of EERAID.
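The two read-scheduling ideas can be illustrated with a minimal Python sketch. It is not EERAID's actual implementation: the function names, the in-memory stripe representation and the fixed window size are assumptions made for illustration only.

```python
from functools import reduce
from typing import List, Optional


def xor_blocks(blocks: List[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks (RAID 5 parity arithmetic)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def transformable_read(stripe: List[bytes], parity: bytes,
                       wanted: int, spun_down: Optional[int]) -> bytes:
    """Return data block `wanted` of one stripe.

    If the block lives on the spun-down disk, rebuild it from the other
    data blocks plus parity instead of waking that disk.
    """
    if wanted != spun_down:
        return stripe[wanted]
    survivors = [blk for i, blk in enumerate(stripe) if i != wanted]
    return xor_blocks(survivors + [parity])


def windowed_round_robin(num_requests: int, window: int,
                         num_mirrors: int = 2) -> List[int]:
    """Assign each RAID 1 read to a mirror, `window` requests at a time.

    While one mirror serves its window, the others stay idle.
    """
    return [(i // window) % num_mirrors for i in range(num_requests)]
```

A larger window gives each idle mirror a longer uninterrupted idle period, at the cost of concentrating the load on a single disk for that time.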

Power-aware redundant array of inexpensive disks (PARAID) dynamically varies the number of powered-on disks to match a varying load. In addition, to tackle the problem of high penalties due to requests for data on spun-down disks, PARAID maintains a skewed data layout. Specifically, free space on active and idle disks is used to store redundant copies of data that are present on spun-down disks. A gear is characterized by a number of active and idle disks. A gear upshift increases the number of powered-on disks to cope with increased performance demand; similarly, a gear downshift spins down additional drives in response to a reduction in system load. The prototype built on a Linux software RAID driver shows a power savings of about 34% as compared to a power-unaware RAID 5.
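Gear shifting can be pictured as a simple threshold policy. The sketch below is hypothetical: the function name, the utilization metric and both threshold values are assumptions, and the text above does not specify the policy PARAID actually uses.

```python
def next_gear(current_gear: int, utilization: float, max_gear: int,
              upshift_at: float = 0.8, downshift_at: float = 0.3) -> int:
    """Pick the gear for the next interval from the observed utilization.

    A higher gear means more powered-on disks. Thresholds are illustrative.
    """
    if utilization > upshift_at and current_gear < max_gear:
        return current_gear + 1   # upshift: spin up more disks
    if utilization < downshift_at and current_gear > 1:
        return current_gear - 1   # downshift: spin down some disks
    return current_gear           # load is within the comfortable band
```

Keeping a gap between the two thresholds avoids rapid oscillation between gears, which matters because each shift involves spinning disks up or down.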

Hibernator is a disk array design for optimizing storage power consumption. It assumes the availability of multispeed disks and tries to dynamically create and maintain multiple layers of disks, each at a different rotational speed. Based on performance, the number of disks in each layer and the speed of the disks themselves are adjusted. A disk speed determination algorithm and efficient mechanisms for exchange of data between various layers are used. Based on trace-driven simulations, an energy savings of about 65% for file system-based workloads can be realized. An emulated system with a DB2 transaction-processing engine showed an energy savings of about 29%.

Power-Aware Data Layout

Controlling disk access by optimizing data layouts is another way of skewing a disk access pattern (i.e. changing a disk's idle periods). The technique called popular data concentration (PDC) works by classifying the data based on file popularity and then migrating the most popular files to a subset of disks, thereby increasing the idle periods of the remaining disks. Maximizing idle time helps in making more transitions to standby state, and hence more power can be conserved. One limitation of this approach is that access of unpopular files could potentially involve turning on or spinning up a disk, which could typically take about 8-12 seconds before actual data access can be made.
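As a rough illustration of the placement step, the following sketch sorts files by access count and fills disks in order, so the first disks end up holding the most popular data. The function name, the input format and the capacity-only constraint are assumptions; a real PDC implementation would also have to limit the load placed on each disk.

```python
from typing import Dict, List, Tuple


def pdc_layout(files: Dict[str, Tuple[int, int]],
               disk_capacity: int, num_disks: int) -> List[List[str]]:
    """Place files on disks in decreasing order of popularity.

    `files` maps a file name to (access_count, size). Disks are filled
    one after another, so later disks hold only unpopular files.
    """
    disks: List[List[str]] = [[] for _ in range(num_disks)]
    free = [disk_capacity] * num_disks
    disk = 0
    by_popularity = sorted(files.items(), key=lambda kv: kv[1][0], reverse=True)
    for name, (_accesses, size) in by_popularity:
        # Move on to the next disk once the current one cannot fit the file.
        while disk < num_disks and free[disk] < size:
            disk += 1
        if disk == num_disks:
            raise ValueError("not enough capacity for all files")
        disks[disk].append(name)
        free[disk] -= size
    return disks
```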

MAID (massive array of idle disks) is another technique that involves data migration. Unlike PDC, MAID tries to copy files based on their temporal locality. MAID uses a small subset of disks as dedicated cache disks and uses traditional methods to exploit temporal locality. The remaining disks are powered on only on demand. However, this scheme also suffers from the fact that files that have not been accessed in the recent past could potentially have a retrieval time totalling tens of seconds. Due to this high performance penalty, MAID is more suited for disk-based archival or backup solutions. Copan Systems' archival data storage systems use this technique and outperform traditional archival and backup products in terms of both performance and power consumption.
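The role of the cache disks can be sketched with a small LRU model. This is an illustration under simplifying assumptions, not the MAID design itself: the class name is hypothetical, capacity is counted in files rather than bytes, and every miss is counted as a spin-up even though the target disk might already be running.

```python
from collections import OrderedDict


class MaidCache:
    """LRU model of MAID cache disks sitting in front of on-demand data disks."""

    def __init__(self, capacity_files: int) -> None:
        self.capacity = capacity_files
        self.cache: "OrderedDict[str, bool]" = OrderedDict()
        self.spin_ups = 0

    def read(self, name: str) -> str:
        if name in self.cache:
            self.cache.move_to_end(name)    # mark as most recently used
            return "cache"
        self.spin_ups += 1                  # miss: a data disk must serve it
        self.cache[name] = True             # copy the file onto a cache disk
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used file
        return "spin-up"
```

Every "spin-up" result in this model corresponds to the slow path described above, which is why a low hit rate makes the scheme unattractive for online workloads.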

The limitation of applicability of MAID to online storage systems can be overcome by the technique called GreenStore which uses application-generated hints to better manage the MAID cache. Cache misses are minimized by making use of application hints, thereby making this solution more suitable for online environments. This opportunistic scheme for application hint scheduling consumes up to 40% less energy compared to traditional non-MAID storage solutions, whereas the use of standard schemes for scheduling application hints on typical MAID systems is able to achieve energy savings of only about 25% compared to non-MAID storage.

Hierarchical Storage Management

HSM, also called tiered storage, is a way to manage data layout, and is widely used in industry. It is implemented in storage systems by IBM, VERITAS, Sun, EMC, Quantum, CommVault and others. In HSM, data are migrated between different storage tiers based on data access patterns. Different storage tiers have significant differences in one or more attributes - namely, price, performance, capacity, power and function. HSM monitors the access pattern of the data, predicts the future usage pattern of the data, stores the bulk of cold data on slower devices (e.g. tapes) and copies the data to faster devices (hard disks) when the data become hot. The faster devices act as the caches of slower devices. HSM is typically transparent to the user, and the user does not need to know where the data are stored and how to get the data.

One example of two-stage HSM is that frequently accessed data are stored on hard disks and rarely accessed data are stored on tapes. Data are migrated to a tape if they are not accessed for a threshold of time, and are moved back to a hard disk again upon access. The data movement is automatic without the user's intervention.

Fibre Channel (FC) disks, SATA disks and tapes can form a three-stage HSM. If the data become cold (i.e. they are not accessed for a period of time), they are first migrated from high-speed, high-cost FC disks to slower but cheaper SATA drives. If the data remain unused for a longer period of time, they are finally moved from SATA disks to tapes, which are slower and cheaper still.
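The migration rule for such a three-stage hierarchy can be sketched as a threshold check on idle time. The function name and the two thresholds (30 and 180 days) are hypothetical values chosen for illustration; real HSM products use configurable policies.

```python
def target_tier(idle_days: int, sata_after: int = 30, tape_after: int = 180) -> str:
    """Return the tier where data should live, given days since last access.

    Thresholds are illustrative assumptions, not values from any product.
    """
    if idle_days >= tape_after:
        return "tape"   # coldest data: slowest and cheapest tier
    if idle_days >= sata_after:
        return "sata"   # cooling data: slower, cheaper disks
    return "fc"         # hot data stays on the fastest tier
```

An access resets the idle time to zero, so the same rule also expresses the move back to the fast tier when data become hot again.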

Moving data from hard disks to tapes, or from FC disks to SATA disks and then to tapes, can not only reduce the storage costs but also reduce the power consumption of the storage system by storing rarely accessed data to low-power devices.

An emerging trend is the integration of SSDs into the storage hierarchy. In HSM consisting of SSDs and disks, hard disk power management techniques like state transitioning can also be integrated for power saving. Specifically, by using the SSD tier above the hard disk tier as a layer of large cache, hard disk idle periods can be extended and access to disks can be optimized. If an SSD can be used for caching the most frequently accessed files and the first portion of all the other files, the hard disks can be put into standby mode most of the time to save energy. The most frequently accessed data will be accessed from SSD. This will provide not only better energy saving but also faster access time. When a file stored on hard disk is accessed, the first portion of the data can be accessed from SSD. This will buy some time for the hard disk to spin up from standby into active mode.
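A back-of-the-envelope calculation shows how large the cached first portion has to be to hide the spin-up delay. The sketch assumes the application consumes the file at a steady rate; the function names and the example figures are illustrative assumptions.

```python
import math
from typing import Tuple


def prefix_bytes(spin_up_seconds: float, consume_rate_bytes_per_s: float) -> int:
    """Smallest prefix that keeps the reader busy while the disk spins up."""
    return math.ceil(spin_up_seconds * consume_rate_bytes_per_s)


def read_plan(file_size: int, prefix: int) -> Tuple[int, int]:
    """Split a full-file read into (bytes from SSD, bytes from hard disk)."""
    from_ssd = min(file_size, prefix)
    return from_ssd, file_size - from_ssd
```

For example, with a 10-second spin-up and a reader consuming 2 MB/s, `prefix_bytes(10, 2_000_000)` gives a 20 MB prefix per file; files smaller than the prefix are served entirely from the SSD.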

Storage Virtualization

Storage virtualization is another key strategy for reducing storage power consumption. With storage virtualization, access to storage can be consolidated onto a smaller number of physical storage devices, which reduces storage hardware costs as well as energy costs. Moreover, those devices will have fewer idle periods due to workload consolidation, which greatly improves the energy efficiency of storage systems.

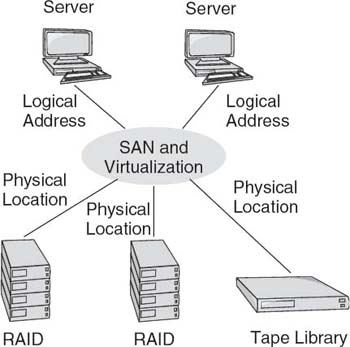

Storage virtualization is commonly used in data centre storage and for managing multiple network storage devices, especially in a storage area network (SAN). It creates a layer of abstraction or logical storage between the hosts and the physical storage devices, so that the management of storage systems becomes easier and more flexible by disguising storage systems' actual complexity and heterogeneous structure. Logical storage is created from the storage pools, which are the aggregation of physical storage devices. The virtualization process is transparent to the user. It presents a logical space to the user and handles the mapping between the physical devices and the logical space. The mapping information is usually stored in a mapping table as metadata. Upon an I/O request, these metadata will be retrieved to convert the logical address to the physical disk location. An example of storage virtualization.
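The mapping table can be sketched as a list of extents that translate logical block addresses into device locations. The extent granularity, the class names and the device labels are assumptions for illustration; real virtualization layers differ in how they structure and persist this metadata.

```python
from bisect import bisect_right
from typing import List, NamedTuple, Tuple


class Extent(NamedTuple):
    logical_start: int   # first logical block covered by this extent
    length: int          # number of blocks in the extent
    device: str          # physical device holding the blocks
    physical_start: int  # first block of the extent on that device


class VirtualVolume:
    """Logical volume backed by extents on several physical devices."""

    def __init__(self, extents: List[Extent]) -> None:
        self.extents = sorted(extents)  # ordered by logical_start
        self.starts = [e.logical_start for e in self.extents]

    def resolve(self, lba: int) -> Tuple[str, int]:
        """Translate a logical block address to (device, physical block)."""
        i = bisect_right(self.starts, lba) - 1
        if i < 0:
            raise KeyError(lba)
        e = self.extents[i]
        if lba >= e.logical_start + e.length:
            raise KeyError(lba)
        return e.device, e.physical_start + (lba - e.logical_start)

    def migrate(self, index: int, device: str, physical_start: int) -> None:
        """Point an extent at a new location; the data copy is not modelled."""
        self.extents[index] = self.extents[index]._replace(
            device=device, physical_start=physical_start)
```

Because hosts only ever see logical addresses, updating one table entry is enough to redirect later requests to a new device.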

Virtualization makes management easier by presenting to the user a single monolithic logical device from a central console, instead of multiple devices that may be heterogeneous and scattered over a network. It enables non-disruptive online data migration by hiding the actual storage location from the host. Changing the physical location of data can be done concurrently with on-going I/O requests.

Virtualization also increases storage utilization by allowing multiple hosts to share a single storage device, and by data migration. The improved utilization results in a reduction of physical storage devices, and fewer devices usually mean less power consumption. Furthermore, since more workloads go to a single device due to the improved utilization, each device becomes more energy efficient due to fewer and shorter idle periods.

Cloud Storage

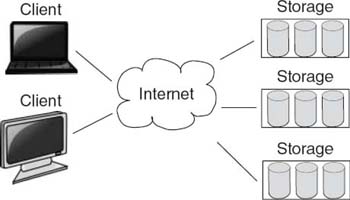

Cloud storage refers to online storage, generally offered by third parties, that is used instead of local storage devices. Those third parties, or hosting parties, usually host multiple data (storage) servers, which form the data centres. The user stores data on, or accesses data from, the data servers over the Internet through a Web-based interface and pays the cloud storage provider for the storage capacity that he or she uses. In general, the fee charged by the service providers is much less than the costs of maintaining local storage for most individual users, small and medium-size companies and even enterprises.

IT and energy costs are reduced because the user does not need to buy and manage his or her own local physical storage devices, perform storage maintenance like replication and backup, prevent over-provisioning, worry about running out of storage space and so on. All of these complex and tedious tasks are offloaded to the cloud storage provider. The convenience, flexibility and ease of management provided by cloud storage, as well as the affordable costs, make cloud storage very attractive and increasingly popular.

Cloud storage relieves local IT administrators from complex storage power management tasks by offloading them to the cloud service provider. The service provider can use sophisticated techniques for minimizing power consumption by storage systems. For example, with a large data footprint, a large number of storage devices and different workloads from different users, the service provider can lay out the data in a power-aware way or organize the data using HSM. The provider can also use storage virtualization to consolidate the workloads from different users onto a single storage device to improve storage efficiency and reduce the device's idle time. The concept of cloud storage provides great opportunities for improving storage efficiency and forming greener storage compared to traditional local storage, although security and reliability are still major issues.

Taken from: Harnessing Green IT: Principles and Practices
